A short overview of the Big O Notation

Some quick examples of algorithms described with the Big O Notation.

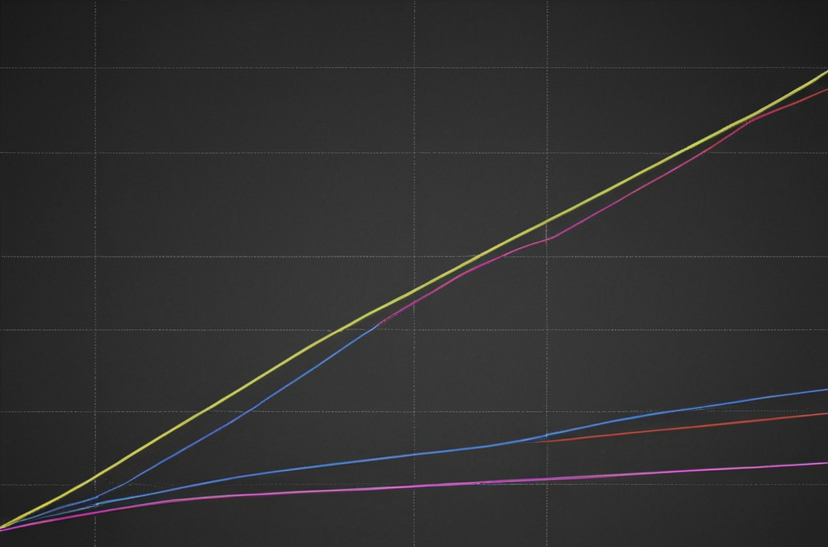

Not really representative of anything... But it looks nice :-)

Short recap

This notation is also called Landau Symbols and describes how many operations are needed to terminate the given algorithm. The amount of operations depends on the amount of input data (in the following examples called n).

Constant algorithms

O(1) => The number of operations is always the same, regardless of the input quantity.

public static int[] doSomething(int[] myArray) {

for(int i = 0; i < 3; i++) {

myArray[i] = 0;

}

return myArray;

}

If the array has a minimum length of 3 (otherwise the function would not work), it does not matter which values are in the array myArray, and the loop would run through 3 times and fill each place in the array with the number 0.

Linear algorithms

O(n) => The number of operations increases linearly with the input quantity.

public static int[] doSomething(int[] myArray) {

for(int i = 0; i < myArray.length; i++) {

myArray[i] = 0;

}

return myArray;

}

The function will now run through the array from start to finish (this is due to myArray.length). Now the for loop is executed as often as the array is long.

Square algorithms

O(n^2) => The number of operations increases as the square of the input quantity.

public static int[] doSomething(int[] myArray) {

for(int i = 0; i < myArray.length; i++) {

for(int j = 0; j < myArray.length; j++) {

myArray[j] = 0;

}

}

return myArray;

}

This function is very meaningless, but it shows that the inner for-loop runs through the entire outer for-loop for each pass.

Two factors

O(n * m) => The algorithm is dependent on n and m.

public static int[] doSomething(int[] n, int[]m) {

for(int i = 0; i < n.length; i++) {

for(int j = 0; j < m.length; j++) {

n[i] = 0;

m[j] = 0;

}

}

return myArray;

}

Logarithmic algorithms

O(log n) => Halving after each execution.

This is always the case when the array to be searched is halved. (e.g: Start at 100 elements; 2nd pass: 50; 3rd pass 25; 4th pass 12; 5th pass: 6; 6th pass: 3; 7th pass 1 ; Here, odd numbers are always rounded down and single-element arrays are considered sorted).

public static void doSomething(int n) {

int i = 1;

while(i < n) {

i = i * 2;

System.out.println(i);

}

}

Other algorithms

O(n log n) => Superlinear complexity => lies between O(n) and O(n^2). Occurs, for example, when a loop is formed via a tree search (e.g. optimized sorting algorithms such as Quicksort).

O(2^n) => Exponential complexity => the runtime doubles when the amount of data increases by one unit (e.g. forming all pairs of a set, towers of Hanoi as a recursive algorithm).

O(n!) => Factorial complexity => the runtime increases with the factorial of the data volume (e.g. traveling salesman problem).