Newshub

I thought to myself: Wouldn't it be cool to have at least one database with lots of data to play around with? It would be even better if that data were actually useful. That's when I started collecting news with my Newshub project.

The start page is fairly minimal, showing the most recently collected news and all available providers.

A few facts ⚡ about the project:

- The collector has been running (almost nonstop) since November 2021.

- As of now (mid-October 2024), there are about 290,000 news items from 13 providers in the database.

- The database is about 200 MB in size.

Main features/components

There are several main components; here is a comprehensive overview:

Provider? What is that?

An example of a provider

Providers are simply sources of news (news outlets, magazines and other feeds). Each provider offers one or more RSS and/or Atom feeds (I always capture one per provider). These feeds contain the news items and are ordinary XML files in a few different flavours that anyone can parse (but not republish commercially).

These feeds are updated regularly (the frequency depends on the provider) and contain both new and old items: old ones get flushed out, new ones appear, and some change.
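Because items eventually drop out of a feed, a collector has to remember what it has already seen and spot what is new or edited. The following is a minimal sketch of one way to do that, assuming each item is identified by its RSS `<guid>` or Atom `<id>`. The `FeedItem` class and `diff_items` function are hypothetical, not Newshub's actual code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedItem:
    guid: str          # RSS <guid> / Atom <id> (assumed stable per item)
    title: str
    description: str


def diff_items(stored: dict[str, FeedItem], fetched: list[FeedItem]):
    """Split a freshly fetched feed into new and changed items.

    Items that dropped out of the feed are ignored here: they stay in the
    database even though the provider no longer lists them.
    """
    new, changed = [], []
    for item in fetched:
        old = stored.get(item.guid)
        if old is None:
            new.append(item)       # never seen before
        elif old != item:          # same id, but title or description was edited
            changed.append(item)
    return new, changed
```

Keying on the item id rather than the title means an edited headline is recognised as an update instead of being stored twice.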

The Collector

Your typical console application

Writing the collector was the easiest part. It is written in Python and has only a few dependencies (one of them is the well-known HTTP library requests). It also includes a Python library I wrote myself: atomicfeed.

I wrote atomicfeed for parsing feed XML files like the ones mentioned above. It automatically detects which kind of feed it is dealing with and extracts information such as the news items and metadata. It is a small but powerful library that can easily be extended with more formats.
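atomicfeed's own API isn't shown in this post, so the following only sketches the detection idea with the standard library's `xml.etree.ElementTree`: the root element is enough to tell RSS 2.0, Atom and RSS 1.0 (RDF) apart. `detect_feed_type` and `parse_titles` are hypothetical names, and only item titles are extracted to keep it short.

```python
import xml.etree.ElementTree as ET

ATOM_NS = "{http://www.w3.org/2005/Atom}"
RDF_NS = "{http://www.w3.org/1999/02/22-rdf-syntax-ns#}"
RSS1_NS = "{http://purl.org/rss/1.0/}"


def detect_feed_type(root: ET.Element) -> str:
    """Guess the feed flavour from the root element's (namespaced) tag."""
    if root.tag == "rss":
        return "rss2"
    if root.tag == ATOM_NS + "feed":
        return "atom"
    if root.tag == RDF_NS + "RDF":
        return "rss1"
    raise ValueError(f"unknown feed format: {root.tag}")


def parse_titles(xml_bytes: bytes) -> tuple[str, list[str]]:
    """Return the detected feed type and the titles of all items."""
    # Passing bytes (not str) lets the parser honour the XML declaration's encoding.
    root = ET.fromstring(xml_bytes)
    kind = detect_feed_type(root)
    if kind == "rss2":
        items = root.iterfind("./channel/item")
        title_tag = "title"
    elif kind == "atom":
        items = root.iterfind(ATOM_NS + "entry")
        title_tag = ATOM_NS + "title"
    else:  # RSS 1.0 puts <item> next to <channel>, not inside it
        items = root.iterfind(RSS1_NS + "item")
        title_tag = RSS1_NS + "title"
    return kind, [(item.findtext(title_tag) or "").strip() for item in items]
```

Supporting another format in this design means adding one more root-tag check and one more branch, which matches the "easily extended" goal described above.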

The other part of this component is the scheduler. It calculates which providers need to be updated next and when, since each provider has its own update interval. I also added multithreading, which gave a big performance boost.
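The post doesn't describe how the scheduler works internally. One common approach is a min-heap keyed by each provider's next due time, combined with a thread pool so that slow HTTP requests don't block each other. The sketch below follows that approach; the `Scheduler` class, `fetch_due` function and the use of seconds as the interval unit are all assumptions.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor


class Scheduler:
    """Hypothetical scheduler: each provider is re-fetched after its own interval."""

    def __init__(self, intervals: dict[str, float], now: float = 0.0):
        # Intervals must be > 0, otherwise due() would reschedule a provider forever.
        self.intervals = intervals
        # Min-heap of (next_due_time, provider); every provider starts out due.
        self.queue = [(now, name) for name in sorted(intervals)]
        heapq.heapify(self.queue)

    def due(self, now: float) -> list[str]:
        """Pop every provider whose due time has passed and reschedule it."""
        ready = []
        while self.queue and self.queue[0][0] <= now:
            _, name = heapq.heappop(self.queue)
            ready.append(name)
            heapq.heappush(self.queue, (now + self.intervals[name], name))
        return ready

    def seconds_until_next(self, now: float) -> float:
        """How long the main loop can sleep before the next provider is due."""
        return max(0.0, self.queue[0][0] - now)


def fetch_due(scheduler: Scheduler, fetch, now: float, pool: ThreadPoolExecutor):
    """Fetch all due providers concurrently and return {provider: result}."""
    ready = scheduler.due(now)
    # Threads help here because network I/O releases the GIL while waiting.
    return dict(zip(ready, pool.map(fetch, ready)))
```

A main loop would call `fetch_due` with the current `time.monotonic()` value, then sleep for `seconds_until_next` before checking again.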

One big pitfall I ran into involved emojis 🎉 and the encodings of these feed files in general (not everybody simply uses UTF-8 😢). That led me to filter emojis out of headlines and news descriptions entirely (which is sad, but not a crucial problem in my opinion).
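One simple way to do this kind of filtering is sketched below. It assumes that "emoji" means any character outside Unicode's Basic Multilingual Plane (code points above U+FFFF). This catches most emojis, such as 🎉 (U+1F389), but deliberately leaves BMP symbols like ⚡ (U+26A1) alone. `strip_emojis` is a hypothetical helper, not Newshub's actual filter.

```python
def strip_emojis(text: str) -> str:
    """Remove characters outside the Basic Multilingual Plane (above U+FFFF)."""
    kept = "".join(
        ch for ch in text
        # Also drop U+FE0F (variation selector), which is often left behind.
        if ord(ch) <= 0xFFFF and ch != "\ufe0f"
    )
    # Collapse the double spaces (and any newlines) left where emojis were removed.
    return " ".join(kept.split())
```

Because the whitespace collapse also joins lines, this suits short fields like headlines better than long, formatted descriptions.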

The API

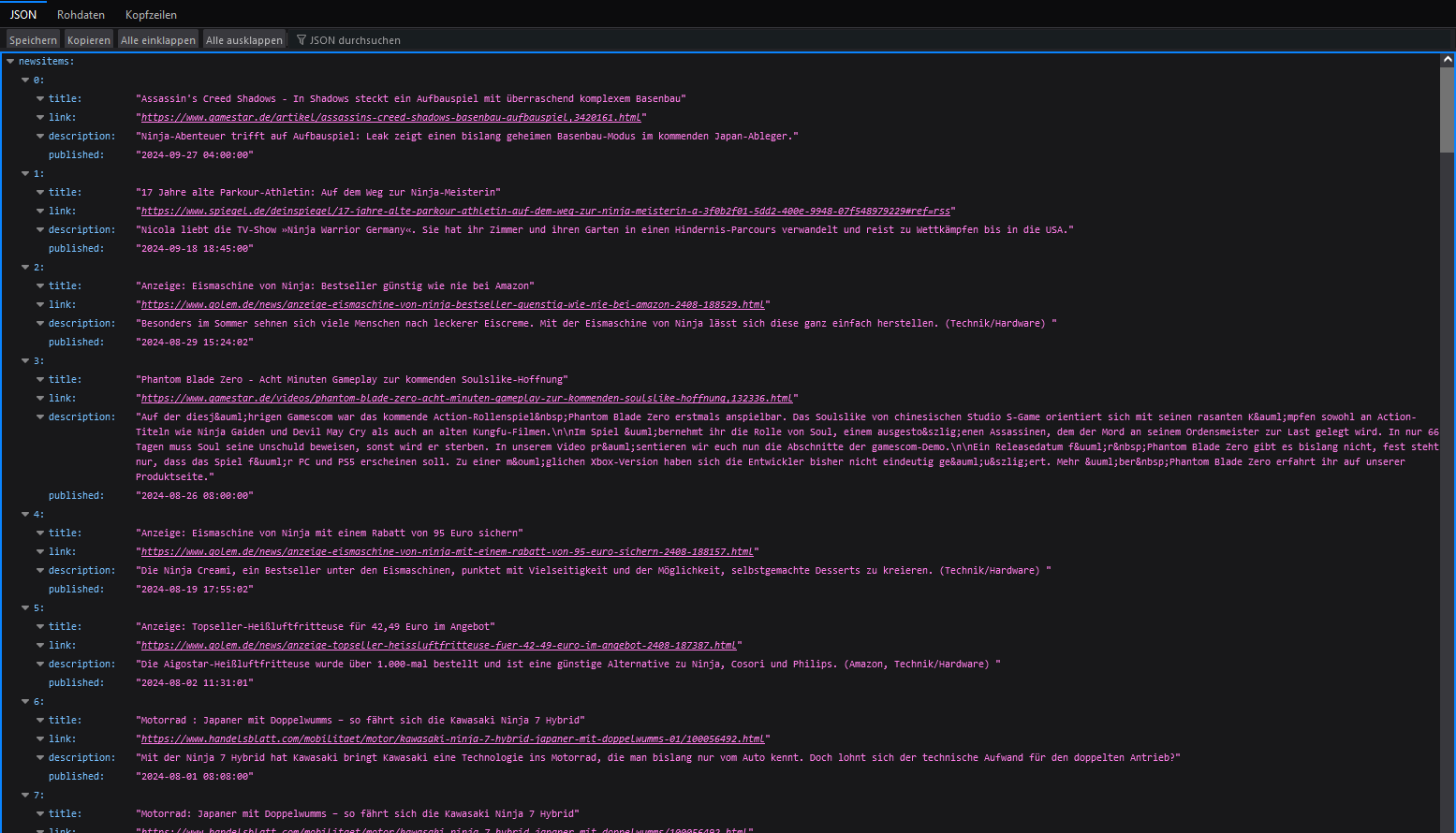

A sample search (API)

The API is used for searching and has four modes for retrieving information:

- Retrieving news items from a specific provider

- Retrieving information about a specific provider

- Searching through news items and providers

- Comprehensive overview for the frontend (newest news etc.)
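To illustrate the search mode, here is a minimal sketch using Python's built-in `sqlite3` module. The post doesn't say which database Newshub uses, so the two-table schema (`providers`, `news`) and the `search` function are assumptions made for this example. A substring `LIKE` match is the simplest option, not necessarily what the real API does.

```python
import sqlite3

SCHEMA = """
CREATE TABLE providers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE news (
    id INTEGER PRIMARY KEY,
    provider_id INTEGER NOT NULL REFERENCES providers(id),
    title TEXT NOT NULL,
    description TEXT,
    published TEXT NOT NULL  -- ISO 8601 timestamp, sorts chronologically as text
);
"""


def search(conn: sqlite3.Connection, term: str, limit: int = 20, offset: int = 0):
    """Return (provider, title) pairs whose title, description or provider
    name contains `term`, newest first.

    SQLite's LIKE is case-insensitive only for ASCII letters, and '%'/'_'
    inside `term` are not escaped in this sketch.
    """
    pattern = f"%{term}%"
    rows = conn.execute(
        """
        SELECT p.name, n.title
        FROM news AS n JOIN providers AS p ON p.id = n.provider_id
        WHERE n.title LIKE ? OR n.description LIKE ? OR p.name LIKE ?
        ORDER BY n.published DESC
        LIMIT ? OFFSET ?
        """,
        (pattern, pattern, pattern, limit, offset),
    )
    return rows.fetchall()
```

The `limit`/`offset` parameters let the same query serve paginated result pages.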

For now, the only consumer of the API is the frontend, but I am planning to build more microservices around Newshub that use the information in the database. Some will produce predictions and trend analyses; others might do something with geography 🌎.

A Cross-Platform Frontend

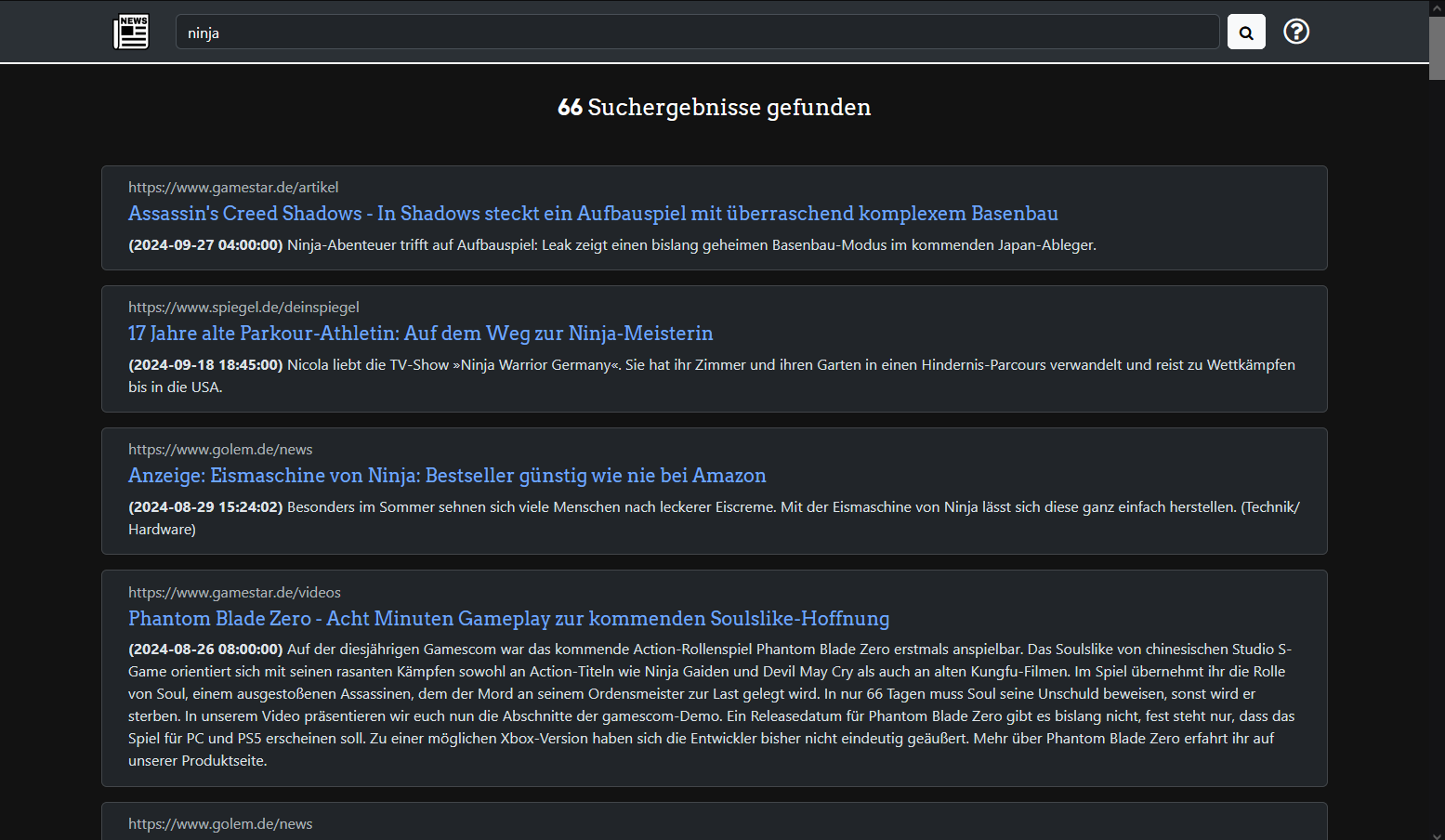

A sample search (Frontend)

Frontends can be very challenging, not just in finding a design that looks decent but also in handling lots of data. In the beginning that wasn't a big concern of mine (I had no idea how long I would keep collecting, or how much 😁). But sooner or later, the search and provider pages could no longer handle the API responses, so I introduced pagination.
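The arithmetic behind page-based pagination is small enough to show in full. This is a sketch assuming 1-based page numbers and a fixed page size (20 here, an arbitrary choice). `page_window` is a hypothetical helper that turns a requested page into the `LIMIT`/`OFFSET` pair a database query needs, plus the total page count a frontend can display.

```python
from typing import NamedTuple


class Page(NamedTuple):
    page: int         # 1-based page actually served (clamped into range)
    total_pages: int
    limit: int        # SQL LIMIT
    offset: int       # SQL OFFSET


def page_window(total_items: int, page: int, per_page: int = 20) -> Page:
    if per_page < 1:
        raise ValueError("per_page must be positive")
    # Ceiling division; an empty result set still has one (empty) page.
    total_pages = max(1, -(-total_items // per_page))
    # Clamp out-of-range requests instead of returning an error.
    page = min(max(page, 1), total_pages)
    return Page(page, total_pages, per_page, (page - 1) * per_page)
```

With about 290,000 items and 20 items per page, `page_window(290_000, 1)` reports 14,500 pages while each response carries only 20 rows.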

The old version of the frontend was written in plain HTML, CSS and JavaScript (without any libraries). While optimizing and changing the frontend code, I noticed that the whole thing was starting to look a bit wild, so I later switched to Bootstrap to be able to focus on other areas. The new version is designed for searching through and displaying large amounts of data.

The Widget

Use one or more

Widgets are a simple way to integrate content from other websites into your own. I thought this would be a good opportunity to implement a widget myself (my other side projects weren't really suited to this kind of feature).

All you need is one simple <div> containing two file includes and one short line of JavaScript, and you are set with one of these neat, informative containers.

Live Demo // Source code

For this project I can provide a link to my hosted instance, which I use on a daily basis. Take a look around...

💡 Loading times can be a bit slow at times, and the whole interface is in German, just like most of the news.